Adapting LLMs to human feedback without fine-tuning

A less-obvious application of Retrieval Augmented Generation

Retrieval augmented generation (RAG) is a framework for providing large language models with additional information that was not part of their training.

The default application for RAG is to improve factual accuracy, but there are other less obvious applications for the concept. One particularly interesting application is integrating human feedback into an LLM without additional training.

RLHF without the RL

‘Reinforcement Learning from Human Feedback’ (RLHF) is a technique that incorporates human feedback into the training of a model.

However, RAG can also be used to incorporate human feedback without additional training. In this example, we’ll do that by taking advantage of the model’s ability to make inferences directly from human feedback.

Let’s say we have a chatbot for customer service backed by an LLM, and our goal is to ensure the chatbot acts naturally when dealing with upset customers.

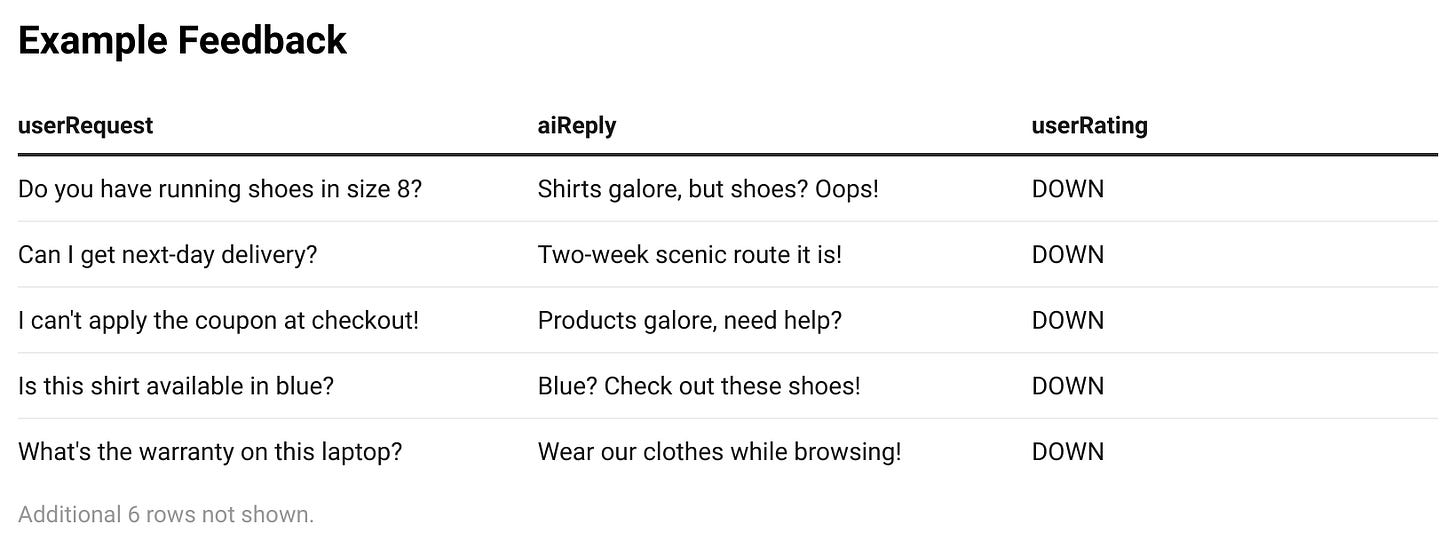

We previously stored snippets of past conversations that our users have rated with either a “thumbs up” or a “thumbs down”.

While typically this would be a perfect application of fine-tuning, let’s consider how we might leverage RAG instead.

Converting annotated examples to guidance

Any time the user sends us a message, we’ll find the most semantically similar past messages in our database and then pass them along to the language model.
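As a rough sketch of that lookup step, here is one way it could look in Python. Everything here is an assumption for illustration: `RatedExample`, `embed`, and `retrieve` are hypothetical names, and the bag-of-words `embed` function is only a stand-in for a real sentence-embedding model and vector database.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class RatedExample:
    user_message: str
    ai_reply: str
    rating: str  # "up" or "down"


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, examples: list[RatedExample], k: int = 2) -> list[RatedExample]:
    """Return the k stored examples whose user message is most similar to the query."""
    q = embed(query)
    ranked = sorted(examples, key=lambda ex: cosine(q, embed(ex.user_message)), reverse=True)
    return ranked[:k]


# The two rated snippets used in this article.
examples = [
    RatedExample(
        "Do you have vegan leather jackets?",
        "Cruelty-free chic? Right this way: [link]",
        "up",
    ),
    RatedExample(
        "I want help returning this suit NOW!",
        "As a large language model I'd prefer you didn't talk to me like that, "
        "please be respectful in our communications.",
        "down",
    ),
]

top = retrieve("I want help returning this suit", examples, k=1)
```

Because new ratings are just new rows in `examples`, adding feedback is an append rather than a training run.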

RAG usually focuses on factual knowledge, but we’ll specifically instruct the model that these examples should be considered for tone and delivery only.

    You are a customer chatbot.
    Your replies are rated by the user based on your response,
    and you adapt your tone and style of communication to align
    with that implied by the example ratings provided.

    Example of a reply that will be rated positively:
    - User: "Do you have vegan leather jackets?"
    - AI: "Cruelty-free chic? Right this way: [link]"

    Example of a reply that will be rated negatively:
    - User: "I want help returning this suit NOW!"
    - AI: "As a large language model I'd prefer you didn't talk to me like that, please be respectful in our communications."

Let’s consider a hypothetical frustrated user telling our chatbot “I’m looking for a stupid shirt.”

Without RAG our reply is a bit stilted, as the underlying model tends to disengage upset users…

I’m sorry to hear you’re having trouble finding the shirt you’re looking for. Could you please provide more details about the shirt? Such as the color, design, or brand? This will help me assist you better.

With RAG, our reply is animated in a way that reflects our examples rather than the default alignment.

No worries, let’s find that shirt for you! Could you please provide more details about it? Color, style, brand, anything that could help me narrow down the search.

Overall, the LLM can infer which aspects of the examples provided are most relevant to ensure that the user rates the new reply as positive rather than negative.
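For reference, the system prompt used in this example could be assembled from retrieved snippets with something like the sketch below. The function name `build_system_prompt` and the dictionary keys (`user`, `ai`, `rating`) are assumptions made for illustration; only the prompt wording comes from the example above.

```python
def build_system_prompt(examples: list[dict]) -> str:
    """Turn rated snippets into tone guidance for the model."""
    header = (
        "You are a customer chatbot.\n"
        "Your replies are rated by the user based on your response,\n"
        "and you adapt your tone and style of communication to align\n"
        "with that implied by the example ratings provided."
    )
    sections = [header]
    for ex in examples:
        # Map the stored thumbs up/down rating to the wording used in the prompt.
        label = "positively" if ex["rating"] == "up" else "negatively"
        sections.append(
            f"Example of a reply that will be rated {label}:\n"
            f'- User: "{ex["user"]}"\n'
            f'- AI: "{ex["ai"]}"'
        )
    return "\n\n".join(sections)


retrieved = [
    {
        "user": "Do you have vegan leather jackets?",
        "ai": "Cruelty-free chic? Right this way: [link]",
        "rating": "up",
    },
    {
        "user": "I want help returning this suit NOW!",
        "ai": "As a large language model I'd prefer you didn't talk to me like that, "
              "please be respectful in our communications.",
        "rating": "down",
    },
]

prompt = build_system_prompt(retrieved)
```

The resulting string would be sent as the system message, with the user's new message following as usual.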

Why use this over RLHF?

I’ll start by clarifying that this isn’t a replacement for RLHF. Our contrived example could be addressed by fine-tuning, or just by more detailed prompting. But this application has some unique benefits:

Ease of implementation: As soon as a reply is rated we can insert it into our database and refer to it going forward, no training needed. And as a bonus, we can now change our underlying model and keep our preferences intact.

Customization (⭐): I mentioned we could prompt the model to be more friendly… but different users have different styles and tones that they prefer. Some users may even prefer our “colder” initial reply. Rather than fine-tune for each user’s preference, we could use RAG to fetch examples based on ratings from the current user or demographically similar users.

We could even integrate heuristics like time spent on the site before engaging the chatbot, or which page the user was on when they started the conversation, by storing that information alongside our examples and including them in our retrieval rankings.

This flexibility is easily the most powerful aspect of this strategy. It allows us to adapt the model according to relationships that would be difficult to fine-tune for or would require multiple models to capture.

Observability: We can directly inspect and modify the database of snippets referenced, the strategy used for retrieval, and how the snippets are presented to the LLM. RLHF, on the other hand, results in changes to the model that can only be observed through evaluation.
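To make the customization point a little more concrete, here is a minimal re-ranking sketch. It assumes the retrieval step has already produced a similarity score for each candidate snippet, and that we stored who rated each snippet and which page the conversation started on. The `Candidate` fields, the `rerank` function, and the bonus weights are all hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    reply: str
    similarity: float  # semantic similarity to the incoming message, 0..1
    rated_by: str      # id of the user who rated this snippet
    page: str          # page the conversation started on


def rerank(candidates, current_user, current_page,
           user_bonus=0.3, page_bonus=0.1, k=2):
    """Boost snippets rated by the current user or collected on the same page."""
    def score(c: Candidate) -> float:
        s = c.similarity
        if c.rated_by == current_user:
            s += user_bonus  # arbitrary weight, an assumption
        if c.page == current_page:
            s += page_bonus  # arbitrary weight, an assumption
        return s

    return sorted(candidates, key=score, reverse=True)[:k]


candidates = [
    Candidate("formal reply", 0.80, "user_a", "/returns"),
    Candidate("playful reply", 0.70, "user_b", "/jackets"),
    Candidate("brief reply", 0.60, "user_b", "/returns"),
]

best = rerank(candidates, current_user="user_b", current_page="/jackets", k=1)
```

Since the scoring function is plain code, it can also be inspected and changed without touching the model, which is the observability benefit in practice.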

Potential shortcomings?

Repetition Problem: LLMs tend to repeat what appears in their context. If we show the LLM an example containing outdated or wrong information, it may incorrectly attempt to repeat that information in its reply. Chain-of-thought prompting is a simple way to account for this and improve overall response quality.

Cost: Fine-tuning is a one-time cost, while RAG increases the number of tokens processed on every request and requires storage. It’s a trade-off that depends on the specifics of your use case.

Latency: Adding a retrieval step adds latency to your response times. Since any semantically similar snippet works, caching can be very effective here.

Conclusion and a final consideration on negative feedback

Fine-tuning is often treated as the first step when customizing models, and with OpenAI announcing fine-tuning for chat completion models, I only expect that trend to continue.

Overall, RAG is not a replacement for fine-tuning, but using RAG to modify subjective aspects of LLM output rather than objective facts unlocks some interesting benefits.

In addition, some fine-tuning strategies, like OpenAI’s SFT-based offering, do not support negative examples. That means you can only train the model on examples of what to say. You cannot train it on examples of what not to say.

As a result, for every negatively rated reply from the model, you’ll need to generate a new reply and hope that it’s one your user would have rated positively. It’s a tedious process that also means you’re optimizing for your impression of a positive response, not your actual users’.

We can’t simply discard the negative examples because of “negativity bias”: negative interactions have a greater impact on our experiences than positive interactions. As long as feedback is optional, that means your dataset will likely be skewed towards negative examples.

You can work around that with more advanced forms of feedback collection and fine-tuning, but being able to show the LLM exactly what constitutes a negative interaction is a powerful benefit of the pattern described.

(If you’re working with open-source models, Direct Preference Optimization (DPO) is a fine-tuning method for more directly influencing the model’s understanding of a positive reply vs. a negative reply.)
